|

I am a machine learning research scientist at DynamoAI, working on developing compliant AI solutions for enterprises. I completed my PhD in the Machine Learning Department at Carnegie Mellon University, where I was advised by Ameet Talwalkar. My research focused on methods that help us understand complex machine learning models, and on applying those methods to useful downstream tasks involving human users. Prior to my graduate studies, I received a Bachelor of Science degree in Computer Science from Caltech. |

|

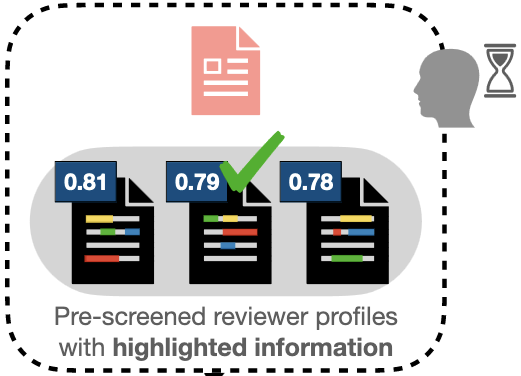

We conduct a crowdsourced study to test different methods that can assist human decision makers in document matching, a task with practical applications such as academic peer review. |

|

We study the problem of offering algorithmic recourse without requiring full transparency about the model, and how a decision maker can incentivize mutually beneficial actions from decision subjects. |

|

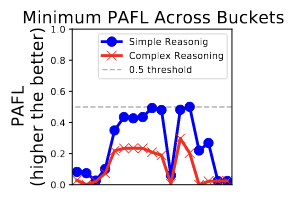

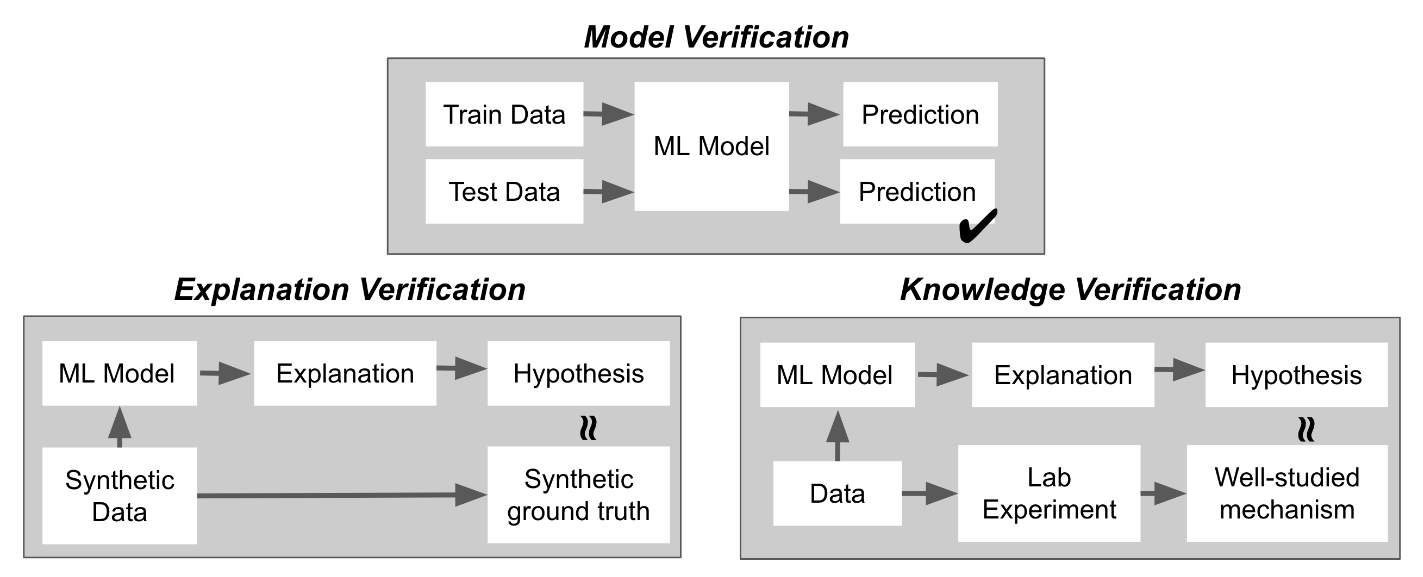

We present a benchmark study of the limitations of leading saliency methods, using several stylized tests of whether they identify the correct sets of features under different types of model reasoning. |

|

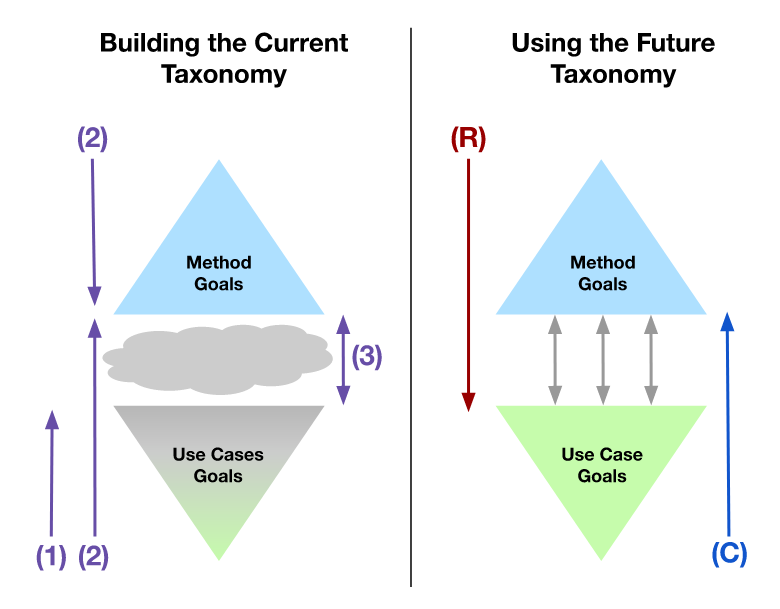

We survey the current landscape of interpretable machine learning and ways to concretely address existing issues and disconnects. |

|

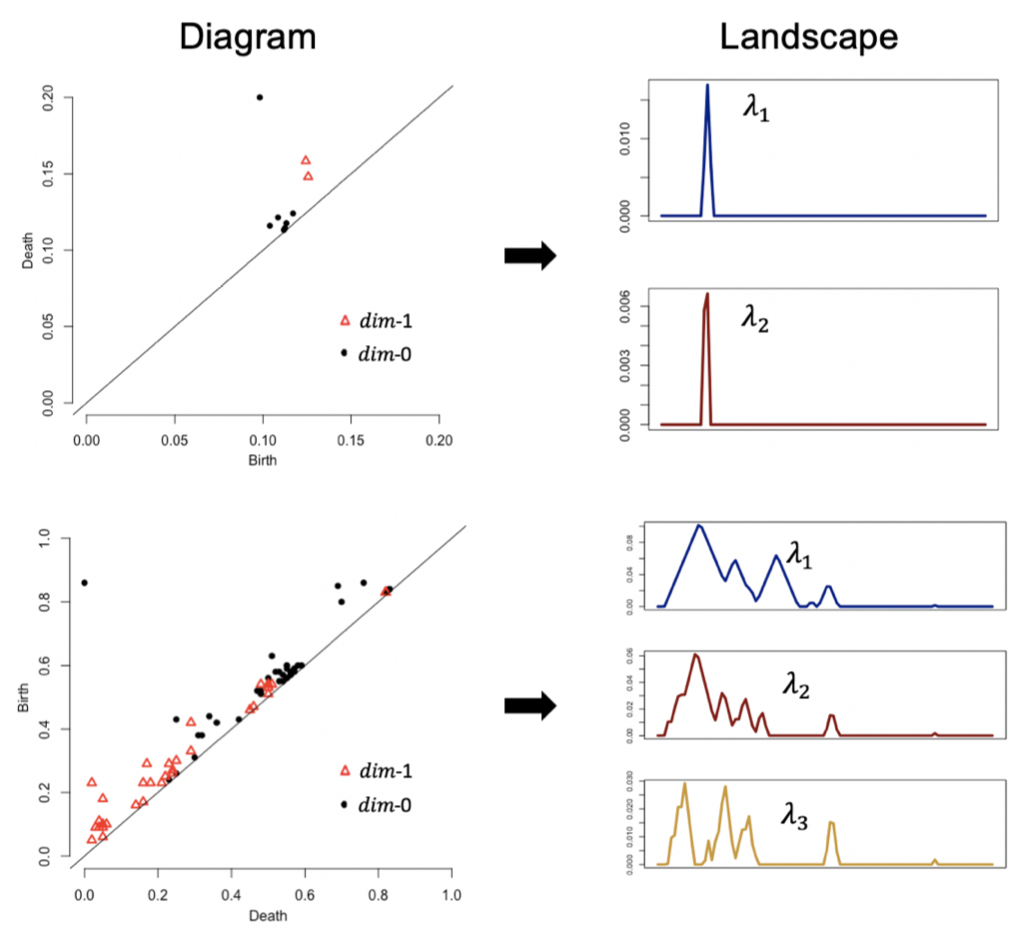

We introduce a novel topological layer for deep neural networks based on persistent landscapes, which efficiently captures underlying topological features of the input data with stronger stability guarantees. |

|

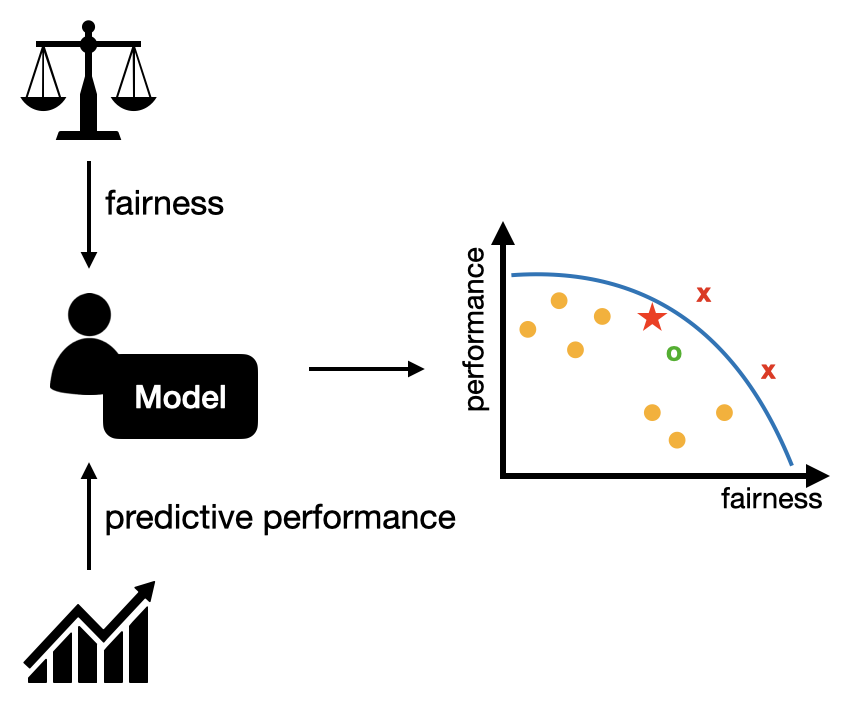

We propose a general framework for understanding and diagnosing different types of trade-offs in group fairness, deriving new incompatibility conditions and a post-processing method for fair classification. |

|

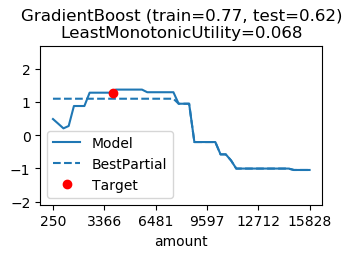

We introduce a framework for automating the search for the partial dependence plot that best captures certain types of model behavior, encoded by customizable utility functions. |

|

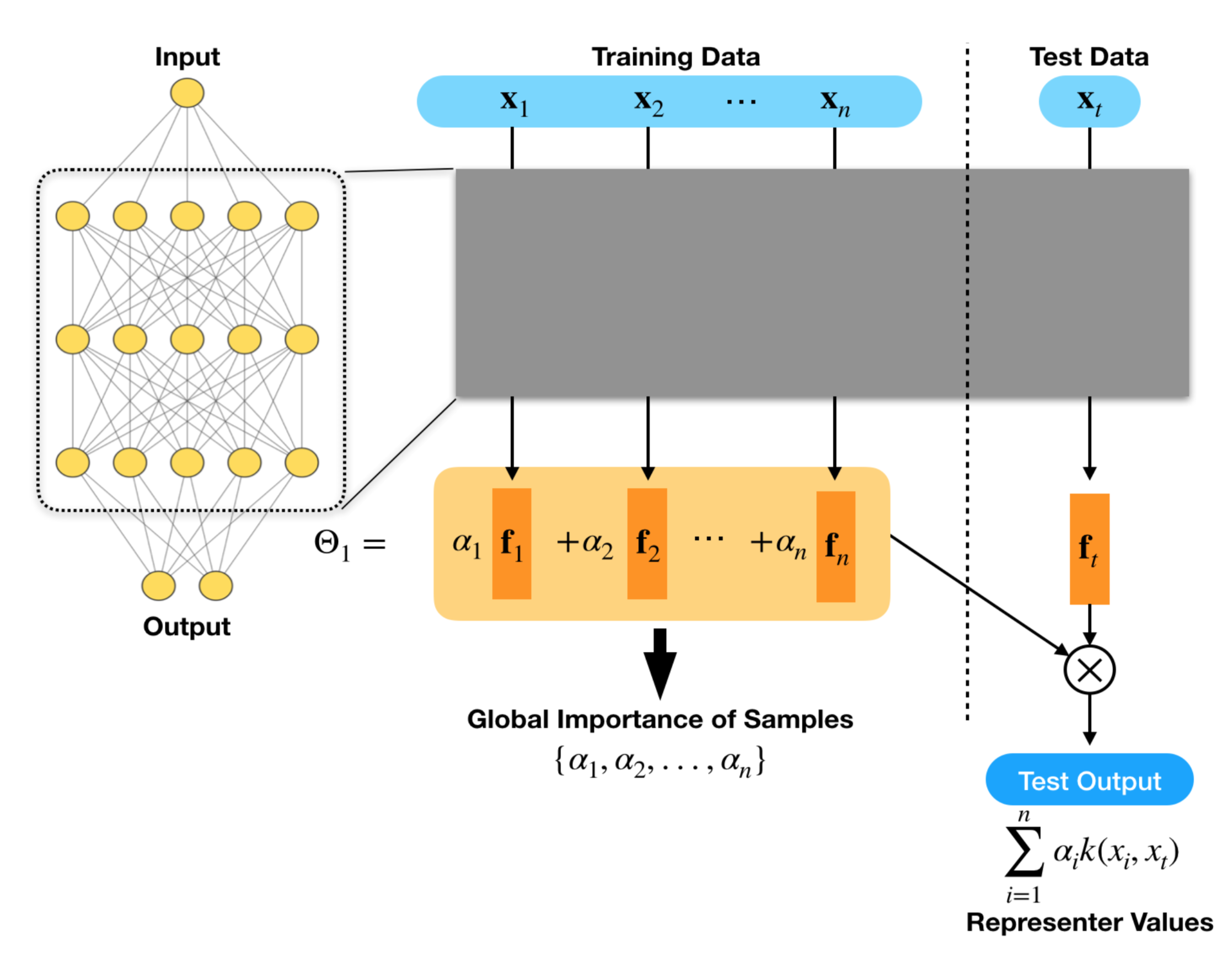

We propose a method that decomposes a deep neural network prediction into a linear combination of activation values of training points, in which the weights (called representer values) allow intuitive interpretation of the prediction. |

|

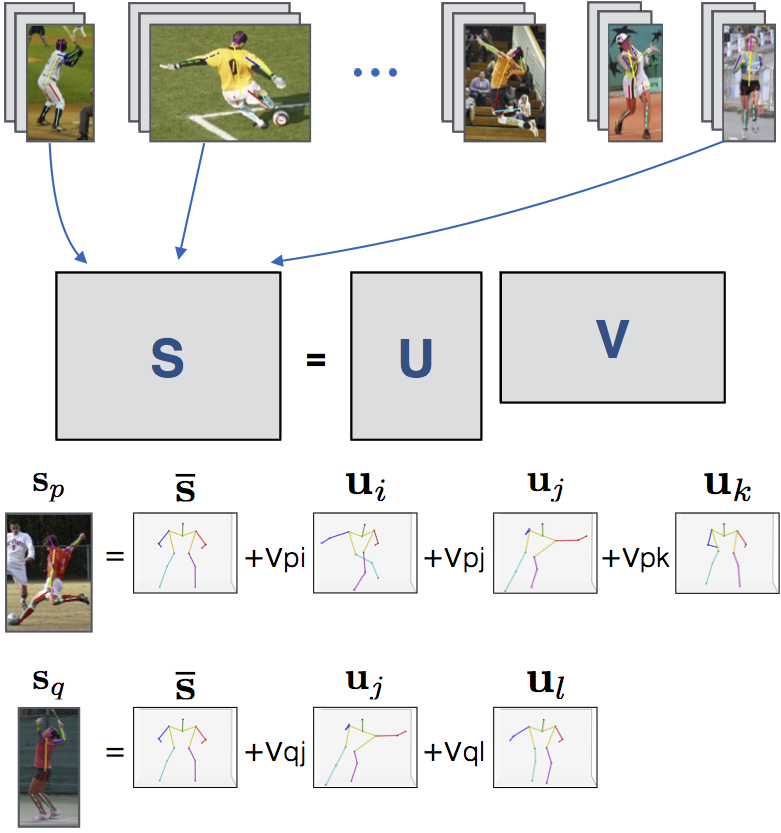

We propose a method to discover a set of rotation-invariant 3-D basis poses that can characterize the manifold of primitive human motions, from a training set of 2-D projected poses obtained from still images taken at various camera angles. |

|

We suggest best-practice workflows for using existing interpretable machine learning methods for tasks in computational biology. |

|
